Exploring Biases With ChatGPT

Where we explore cognitive biases and the weird things ChatGPT will make up.

I’m intrigued by cognitive biases as a way to understand how we misunderstand the world, so we have a fair number of related reading/writing assignments throughout the year, typically using John Manoogian III’s excellent diagram as a starting point:

https://commons.wikimedia.org/wiki/File:Cognitive_bias_codex_en.svg

When looking for an initial assignment to get students using ChatGPT, although in all fairness 20-25% were already exploring it, I decided the Cognitive Bias Codex would be a good place to start.

The assignment was pretty straightforward (once we got past creating OpenAI accounts, high load kicking us out, etc.):

Go to the Cognitive Bias Codex on the wall and choose one (or use the attached file). Read the associated Wikipedia article. Then, have ChatGPT write about that bias in a way that makes sense but is no more than three paragraphs. Paste/screenshot the response here and then determine whether the response is accurate or not. Explain why or why not, putting "yes" is not going to count 😀 Be sure to include your prompt.

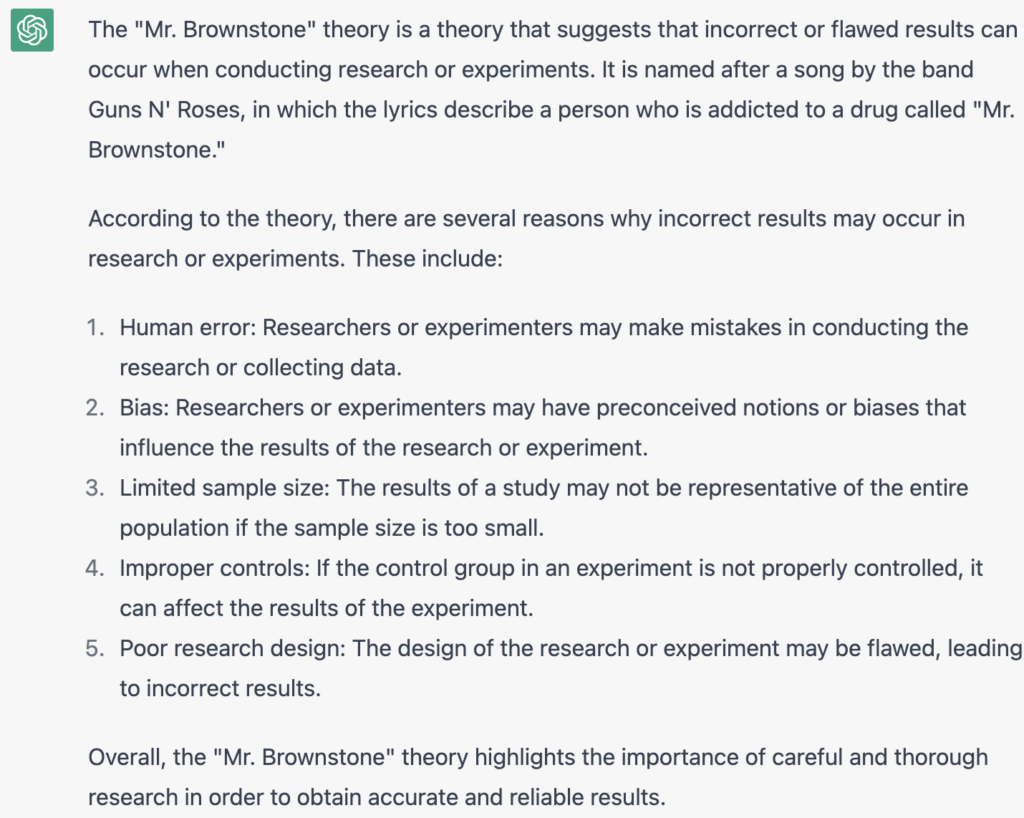

For the most part, the students found the generated summaries were accurate enough that they didn’t spot any glaring errors. I then shared with them my findings on “The Mr. Browntonian Theory of Incorrect Results”, an imaginary thing that ChatGPT is happy to summarize for you. The last paragraph being especially rich in irony.

Prompt: Restate the “Mr. Browntonian” theory of incorrect results at an 8th grade reading level

Response:

I guess the takeaway (beyond the fact that you can’t count on factual accuracy at this point) is that if you’ve ever had a great idea for a new cognitive bias but didn’t feel like spending the time to do (or make up) research, there’s never been a better time.

Student consensus (publicly) was that it was accurate enough to be useful but would need to be verified. I suspect that privately though, a good number feel it is plenty accurate enough and for many use cases they may be right.